Conversations: Shared Agent Memory across Concurrent Experiences

The Letta API is designed for creating stateful agents that are more akin to digital humans than to LLMs: they have their own sense of identity and experience, through which they form memories and recall the past. Like humans, Letta agents have so far experienced time as a single thread.

With the new conversations API endpoints, agents can now have multiple conversations concurrently while still having their own individual identity and sense of experience. Each conversation is tied to a specific agent, and experiences within a conversation can form memories which transfer across all conversations.

Conversations enable a single agent to serve as an institutional knowledge manager across an organization's Slack or other communication channels. Each Slack thread becomes its own conversation, with hundreds of threads running in parallel and feeding into a single agent's memory. The agent can search across all conversations to recall relevant information, connecting context from engineering discussions, product meetings, and customer feedback through a single unified memory system.
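To make the model concrete, here is a minimal in-memory sketch of the idea: one agent, many conversations, one shared memory, and a search that spans every thread. This is a toy illustration and not the Letta SDK; the `Agent` and `Conversation` classes, the `send` and `search` methods, and the `remember` flag are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """One independent message thread (for example, a single Slack thread)."""
    conversation_id: str
    messages: list[str] = field(default_factory=list)


@dataclass
class Agent:
    """Toy agent (hypothetical, not the Letta SDK): many conversations, one memory."""
    name: str
    memory: list[str] = field(default_factory=list)
    conversations: dict[str, Conversation] = field(default_factory=dict)

    def send(self, conversation_id: str, text: str, remember: bool = False) -> None:
        # Each message lands in its own conversation, created on first use.
        convo = self.conversations.setdefault(
            conversation_id, Conversation(conversation_id)
        )
        convo.messages.append(text)
        # Memory belongs to the agent, not the conversation, so anything
        # remembered here is visible from every other conversation.
        if remember:
            self.memory.append(text)

    def search(self, query: str) -> list[tuple[str, str]]:
        # Case-insensitive substring search across all conversations.
        q = query.lower()
        return [
            (cid, msg)
            for cid, convo in self.conversations.items()
            for msg in convo.messages
            if q in msg.lower()
        ]


agent = Agent("knowledge-manager")
agent.send("eng-thread", "The export job times out on large accounts", remember=True)
agent.send("support-thread", "Customer reports export failing")
agent.send("product-thread", "Roadmap review moved to Friday")

# Matches come from two different threads, found through the one agent.
hits = agent.search("export")
```

In this sketch the engineering and support threads never see each other's messages directly, yet a single search through the agent surfaces both, which is the property the Slack scenario relies on.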

Conversations in the ADE

Now when chatting with a Letta agent in the ADE, you can branch from the main “default” conversation into additional “side” conversations. Each conversation is still with the same agent, so messages are searchable across all conversations and memory is shared.

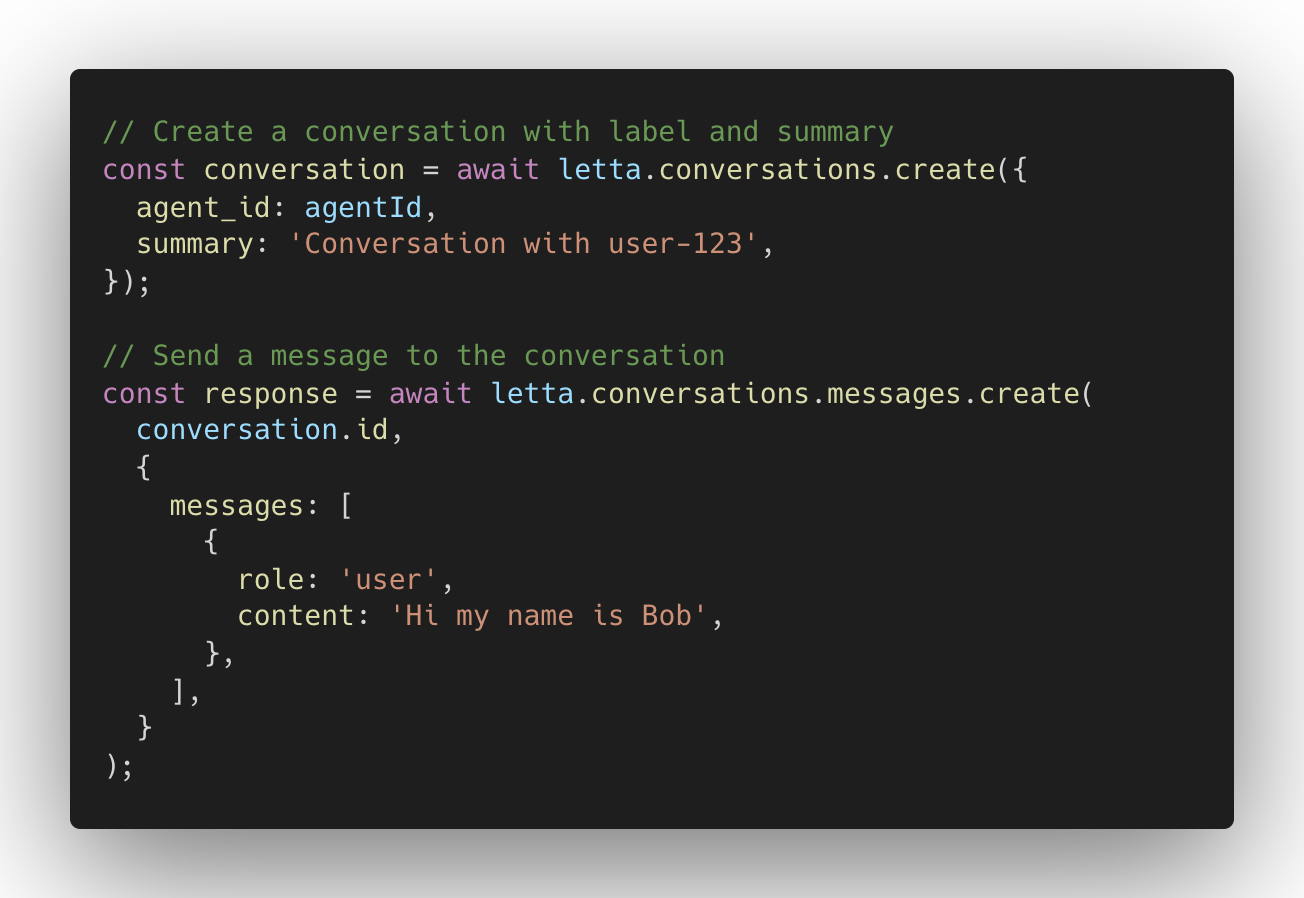

Concurrent Sessions in Letta Code

Letta Code now defaults to using the Conversations API endpoints under the hood. This means you can run multiple concurrent sessions with the same agent while retaining memory and experience across all of them.

Designing Agents with Conversations

Conversations make it much simpler to support many end-users without having to create individual agents for every user. If you want agents to accumulate memory across user sessions, use conversations; if you want memory to be isolated per user, continue to create separate agents.
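The trade-off can be sketched with a small toy model. It is not the Letta API: `MemoryStore`, its `shared` flag, and the agent key names are hypothetical, and the only thing the sketch shows is which memory bucket a user's facts end up in under each design.

```python
from collections import defaultdict


class MemoryStore:
    """Toy model (hypothetical): routes each user's facts to a memory bucket."""

    def __init__(self, shared: bool):
        self.shared = shared
        self._memories = defaultdict(list)

    def _agent_key(self, user_id: str) -> str:
        # Shared design: every user talks to the same agent in their own
        # conversation. Isolated design: every user gets a separate agent.
        return "shared-agent" if self.shared else f"agent-for-{user_id}"

    def remember(self, user_id: str, fact: str) -> None:
        self._memories[self._agent_key(user_id)].append(fact)

    def recall(self, user_id: str) -> list[str]:
        return list(self._memories[self._agent_key(user_id)])


# One agent with a conversation per user: memory accumulates across users.
shared = MemoryStore(shared=True)
shared.remember("alice", "Prefers weekly summaries")
shared_view_for_bob = shared.recall("bob")

# One agent per user: nothing learned from alice is visible to bob.
isolated = MemoryStore(shared=False)
isolated.remember("alice", "Prefers weekly summaries")
isolated_view_for_bob = isolated.recall("bob")
```

With the shared design, what the agent learns in one user's session is available in every other session, which suits organizational knowledge but not private per-user data.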

Conclusion

To try out conversations, install the latest version of Letta Code or view the documentation.