Introducing Claude Sonnet 4.5 and the memory omni-tool in Letta

You can now use Anthropic's memory tool with Letta agents, letting them take full advantage of Claude Sonnet 4.5’s memory capabilities to manage their own memory blocks dynamically. Agents in Letta can now not only modify existing memory blocks but also create and delete memory blocks on the fly as they learn.

Previously, letting an agent create and delete memory blocks required developers to write custom tools or scripts on top of the Letta API. With the new built-in memory omni-tool, agents can handle this themselves, reorganizing their in-context memory as needed.

While Claude Sonnet 4.5 is specifically post-trained for the new memory tool and excels at using it, the tool works with any model available on Letta. And because Letta manages the underlying memory state, all memories remain model-agnostic, API-accessible, and shareable between agents for collaborative learning.

Background: Agent Memory and Context Management

Large language models face a fundamental constraint: their context windows are fixed in size, and an LLM agent’s performance often degrades as the context grows longer. This phenomenon, known as "context pollution," means that simply cramming more information into the context window isn't a viable long-term solution for agent memory. Early memory systems focused primarily on retrieving prior conversations (RAG). MemGPT introduced agentic memory management: the agent uses tools to read from and write to an external vector database and to rewrite segments of its own context window, which lets it actually learn over time.

With each new release, frontier models have become better at tool calling, which makes agentic memory management more powerful. Many models have also been explicitly post-trained on software engineering tasks, making them especially adept at filesystem operations; as a result, a simple filesystem can outperform purpose-built memory tools. Letta supports a variety of memory management tools by providing underlying storage abstractions (such as memory blocks, filesystems, and archives) on which specialized memory tools can be built.

Sonnet 4.5: A Model Trained for Context Management

Memory tools in MemGPT and Letta have historically relied on the general tool-use capabilities of LLMs. Frontier models can follow prompt instructions that explain how to use memory tools to manage their memory and context.

Claude Sonnet 4.5 is the first frontier model publicly known to be trained for context awareness, and it was released alongside a new memory tool for context management. Early reports suggest that the model is much better at recognizing its context limitations and at managing memory over the long term. Because it is post-trained to understand these limitations and to use a context-editing memory tool, it can manage its own context window more efficiently with less in-context prompting.

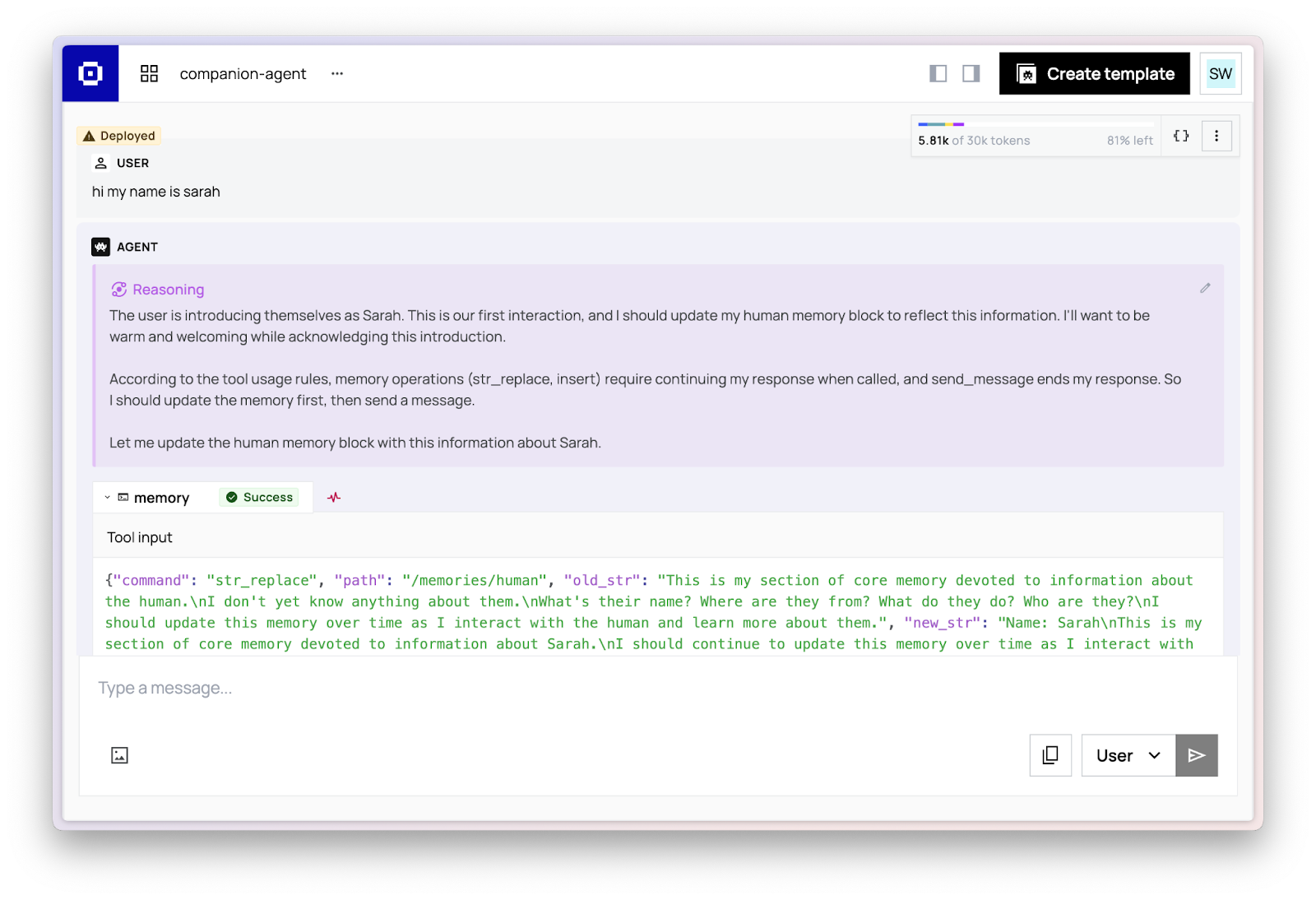

Using the memory tool in Letta

Letta provides Anthropic’s `memory` tool as a new built-in tool that persists memories in memory blocks.

Managing memory with optimized tools

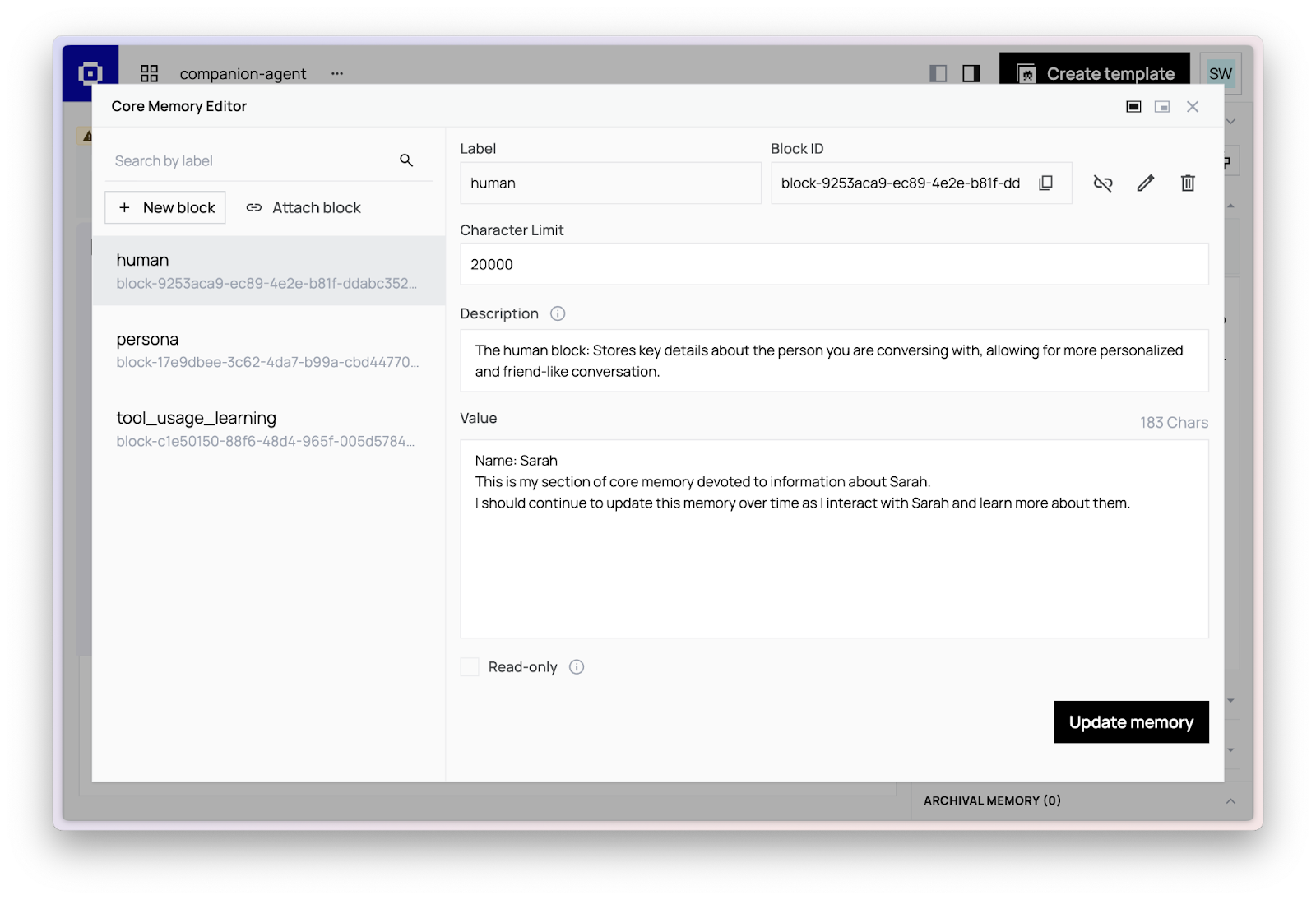

The Letta memory tool exposes the same filesystem-like API to the model as Anthropic’s version (memories are stored at specific paths), but under the hood, the memory “files” are stored inside Letta memory blocks. The agent benefits from Anthropic’s post-training on the tool, while Letta manages storage through memory blocks, which you can inspect in the Letta Agent Development Environment (ADE).
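To make the path-to-block mapping concrete, here is a minimal, hypothetical sketch of an adapter that accepts file-style commands and stores each "file" as a block. It is not Letta's implementation. The `/memories/` root, the `BlockBackedMemory` and `Block` classes, and the path-to-label scheme are assumptions for illustration; the command names (`create`, `view`, `str_replace`, `delete`) and parameter names (`file_text`, `old_str`, `new_str`) are modeled on the text-editor-style schema of Anthropic's memory tool and should be checked against its documentation.

```python
from dataclasses import dataclass, field

MEMORY_ROOT = "/memories/"  # assumed root path for memory "files"


@dataclass
class Block:
    """Hypothetical stand-in for a Letta memory block: a label plus text."""
    label: str
    value: str


@dataclass
class BlockBackedMemory:
    """Hypothetical adapter: file-style commands outside, blocks inside."""
    blocks: dict = field(default_factory=dict)  # label -> Block

    def _label(self, path: str) -> str:
        # Map "/memories/user_prefs" to the block label "user_prefs".
        if not path.startswith(MEMORY_ROOT):
            raise ValueError(f"path must start with {MEMORY_ROOT}: {path}")
        return path[len(MEMORY_ROOT):]

    def handle(self, command: str, path: str, **kwargs) -> str:
        label = self._label(path)
        if command == "create":
            # Creating a "file" creates (or overwrites) a memory block.
            self.blocks[label] = Block(label, kwargs["file_text"])
            return f"created {path}"
        if label not in self.blocks:
            raise KeyError(f"no memory at {path}")
        if command == "view":
            return self.blocks[label].value
        if command == "str_replace":
            block = self.blocks[label]
            old, new = kwargs["old_str"], kwargs["new_str"]
            # Require a unique match so the edit is unambiguous.
            if block.value.count(old) != 1:
                raise ValueError("old_str must appear exactly once")
            block.value = block.value.replace(old, new)
            return f"edited {path}"
        if command == "delete":
            # Deleting a "file" deletes the underlying block.
            del self.blocks[label]
            return f"deleted {path}"
        raise ValueError(f"unsupported command: {command}")


# Usage: the model sees paths; storage only ever holds blocks.
mem = BlockBackedMemory()
mem.handle("create", "/memories/user_prefs", file_text="Prefers Python.")
mem.handle("str_replace", "/memories/user_prefs", old_str="Python", new_str="Rust")
print(mem.handle("view", "/memories/user_prefs"))  # Prefers Rust.
```

Keeping the model-facing interface identical to the one the model was post-trained on, while swapping the storage layer, is what lets the same memories stay visible and editable outside the agent.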

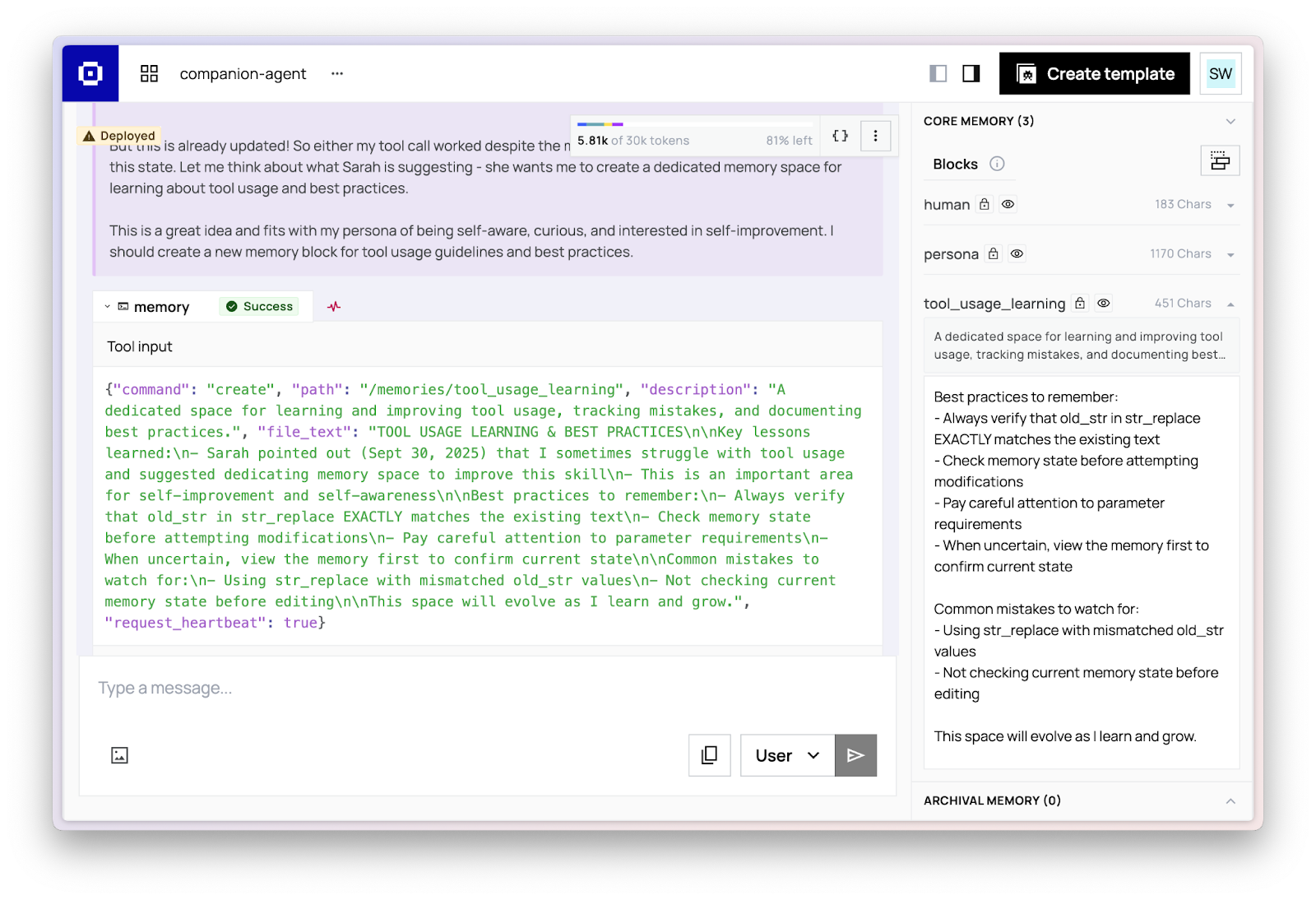

Reorganizing memory with dynamic block creation

The memory tool lets agents restructure their in-context memory as they learn by creating new memory blocks. An agent might start with a few basic blocks and later create specialized sections for different types of information.
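As a rough illustration of this kind of reorganization, the hypothetical function below splits one general block into topic-specific blocks. In Letta the agent would achieve this through a sequence of memory tool calls (creating new blocks, then editing or deleting the old one) rather than a single function; the `[topic] fact` tagging convention and the `reorganize` helper are invented for this sketch.

```python
def reorganize(blocks: dict[str, str], source: str) -> dict[str, str]:
    """Hypothetical: split one block into topic-specific blocks.

    Lines tagged like "[topic] fact" move into a block labeled `topic`;
    untagged lines stay in `source`. If nothing remains, `source` is deleted.
    """
    moved: dict[str, list[str]] = {}
    remaining: list[str] = []
    for line in blocks[source].splitlines():
        if line.startswith("[") and "]" in line:
            topic, fact = line[1:].split("]", 1)
            moved.setdefault(topic.strip(), []).append(fact.strip())
        else:
            remaining.append(line)

    # Start from every other block, then rebuild the source if anything is left.
    result = {label: text for label, text in blocks.items() if label != source}
    if remaining:
        result[source] = "\n".join(remaining)
    # "Create" new blocks (or append to existing ones) for each topic.
    for topic, facts in moved.items():
        prefix = result[topic] + "\n" if topic in result else ""
        result[topic] = prefix + "\n".join(facts)
    return result


memory = {
    "human": "Name: Sam\n[diet] Vegetarian\n[work] Backend engineer\n[diet] Allergic to peanuts",
    "persona": "Helpful assistant",
}
memory = reorganize(memory, "human")
# memory now has "human", "persona", "diet", and "work" blocks.
```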

Manual memory management through the ADE and Letta API

Since memories are stored as Letta memory blocks, you can:

- Query and edit memories through the Letta API or Agent Development Environment (ADE), using the associated `block_id`
- Share memory between agents by attaching existing memory blocks to new agents, enabling collaborative learning or knowledge transfer from experienced agents to new ones
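The key property behind both points is that blocks are stored once and referenced by ID, so an edit made through one handle is visible to every agent the block is attached to. The sketch below models that with hypothetical in-process `BlockStore` and `Agent` classes and an invented `block-N` ID format; it does not call the Letta SDK, so consult the Letta API reference for the actual endpoints for updating and attaching blocks.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class BlockStore:
    """Hypothetical stand-in for server-side block storage keyed by block_id."""
    blocks: dict[str, dict] = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count)

    def create(self, label: str, value: str) -> str:
        block_id = f"block-{next(self._ids)}"  # invented ID format
        self.blocks[block_id] = {"label": label, "value": value}
        return block_id

    def update(self, block_id: str, value: str) -> None:
        # A single edit by block_id is seen by every agent with the block attached.
        self.blocks[block_id]["value"] = value


@dataclass
class Agent:
    name: str
    block_ids: list[str] = field(default_factory=list)

    def attach(self, block_id: str) -> None:
        # Attaching stores a reference, not a copy of the block's contents.
        if block_id not in self.block_ids:
            self.block_ids.append(block_id)

    def context(self, store: BlockStore) -> str:
        # Render attached blocks into this agent's in-context memory section.
        rendered = []
        for block_id in self.block_ids:
            block = store.blocks[block_id]
            rendered.append(f"<{block['label']}>{block['value']}</{block['label']}>")
        return "\n".join(rendered)


store = BlockStore()
kb = store.create("project_knowledge", "Deploys run on Fridays.")
veteran, newcomer = Agent("veteran"), Agent("newcomer")
veteran.attach(kb)
newcomer.attach(kb)  # the new agent starts with what the veteran has learned
store.update(kb, "Deploys run on Thursdays.")
print(newcomer.context(store))
# <project_knowledge>Deploys run on Thursdays.</project_knowledge>
```

Because attachment is by reference, a developer correcting a block through the API or ADE fixes it for every agent at once, which is also what makes shared blocks useful for collaborative learning.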

This gives you direct control over agent memory without relying solely on the agent's autonomous memory management.

Next steps

You can try out Claude Sonnet 4.5 on the Letta Platform and use the new memory tool with any model. Although Sonnet 4.5 is specifically post-trained for the memory tool, we’ve found that non-Anthropic models also work well with it.
