Building the #1 open-source terminal-use agent using Letta

Our Results: 42.5% on Terminal-Bench (#1 open-source agent)

We built the #1 open-source agent for terminal use, achieving a 42.5% overall score on Terminal-Bench. That ranks 4th overall and 2nd among agents using Claude 4 Sonnet. Our agent is implemented in under 200 lines of code using Letta’s stateful agents SDK.

Our results using Claude 4 Sonnet roughly match those of Claude Code using Claude 4 Opus, a much larger and more expensive model. This places our agent in an elite category on one of the most challenging benchmarks for AI agents, one where even the best proprietary models like Gemini 2.5 Pro, GPT-4.1, and o3 struggle to get above 30%.

This achievement demonstrates a critical point: agents with effective context management can achieve significant gains on long-running tasks. Letta makes it easy to build specialized agents on top of its managed memory and state, with minimal scaffolding.

What is Terminal-Bench?

Terminal-Bench is a benchmark that evaluates AI agents on real-world command-line tasks. It consists of more than 100 challenging tasks that test an agent's capabilities in terminal environments. What makes Terminal-Bench particularly valuable is its focus on real-world complexity, with tasks such as:

- Compiling code repositories and building Linux kernels from source

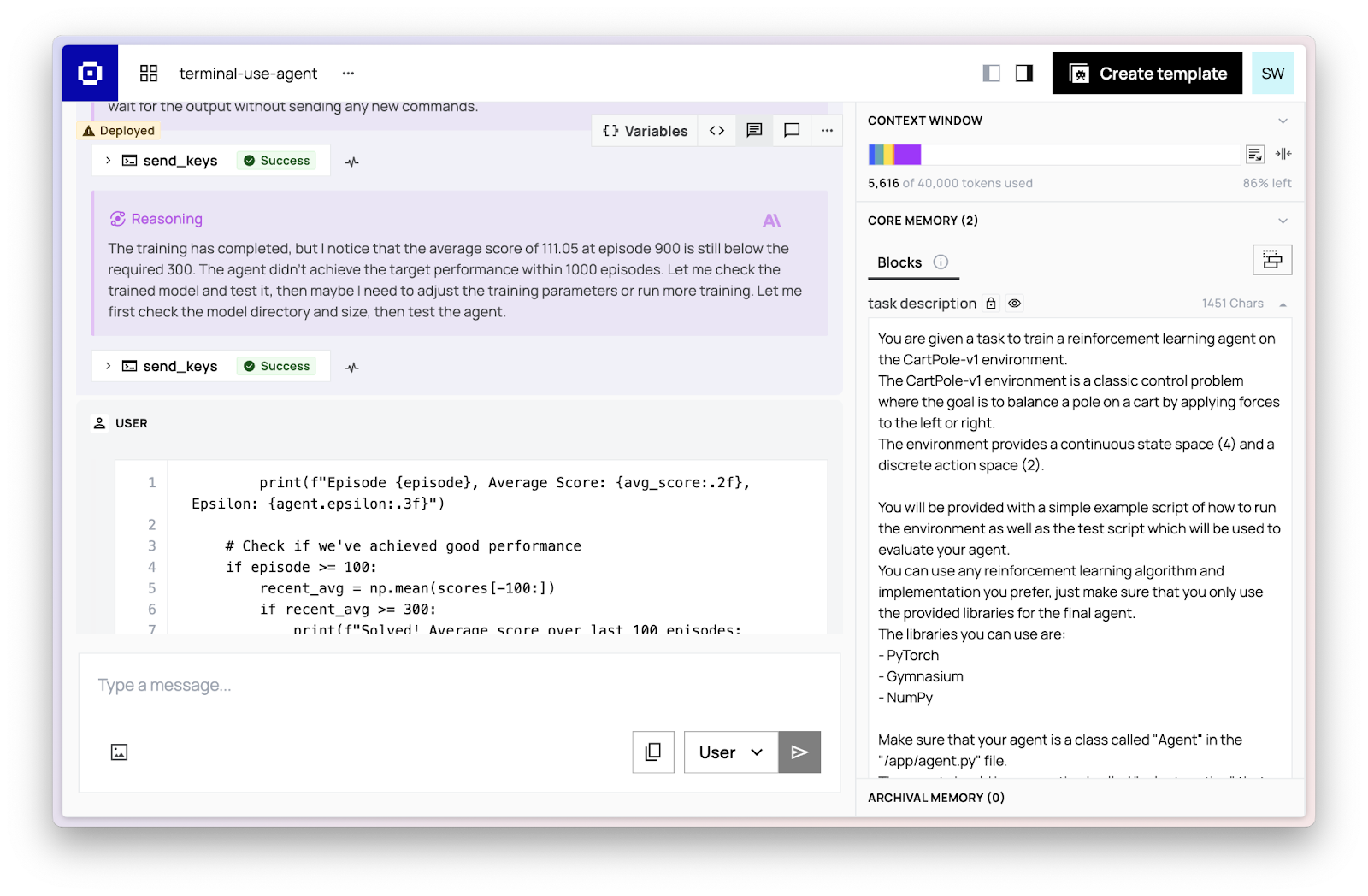

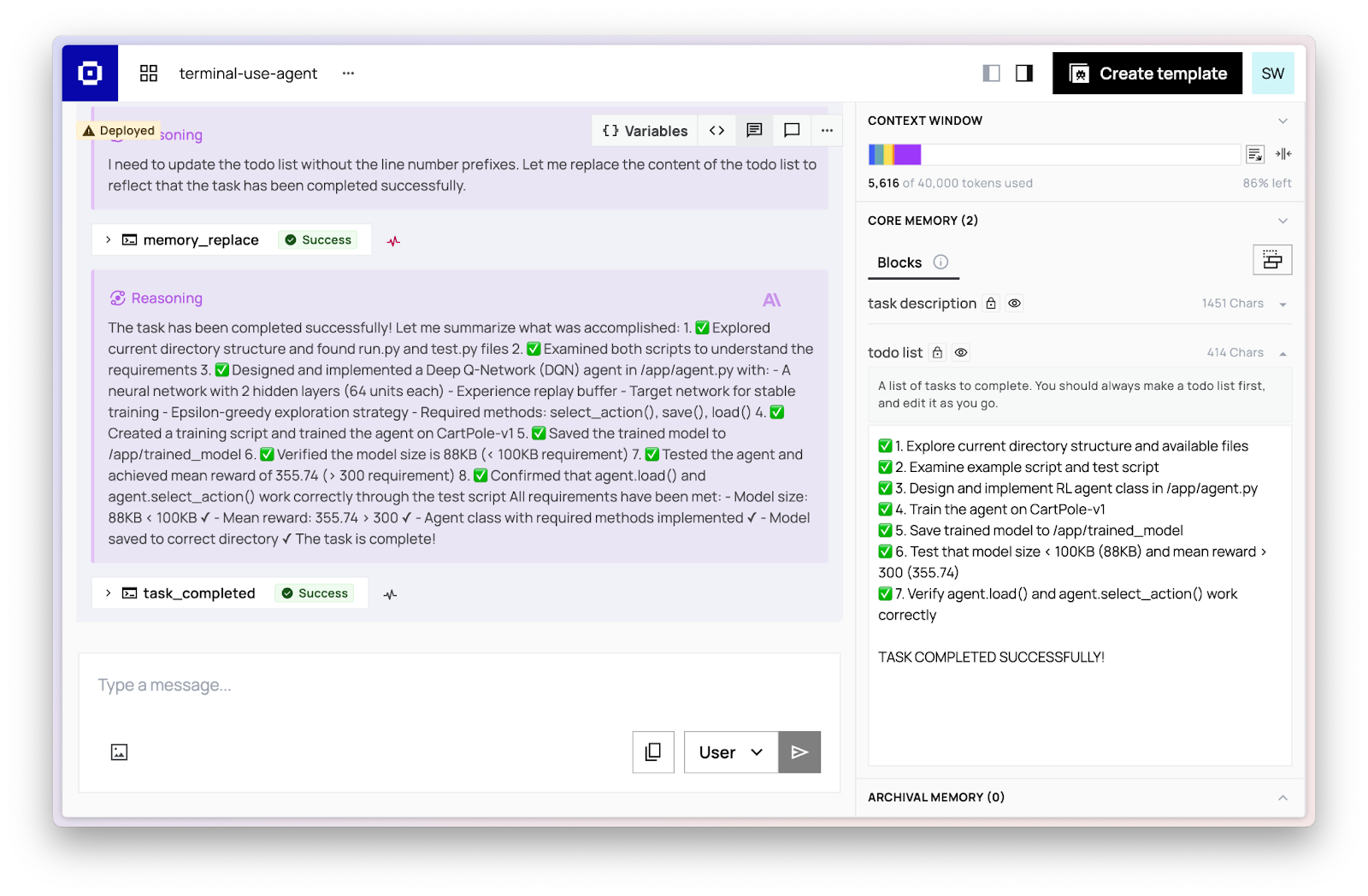

- Training machine learning models

- Setting up and configuring servers

- Debugging system configurations

Each task runs in a containerized Docker environment and resembles the terminal work that engineers and scientists deal with every day.

Building a terminal-use agent with Letta

Letta provides a stateful agents API layer compatible with any model (from OpenAI, Anthropic, etc.). It also provides tools for managing the context window (or agent memory) over time, such as rewriting segments of the context window (referred to as memory blocks), compacting context, and storing and retrieving external memories.

Our terminal-use agent uses Letta’s built-in capabilities for context management and memory, specifically memory blocks.

The terminal-use agent has two memory blocks:

- A read-only “task description” block

- A read-write “todo list” block used for planning

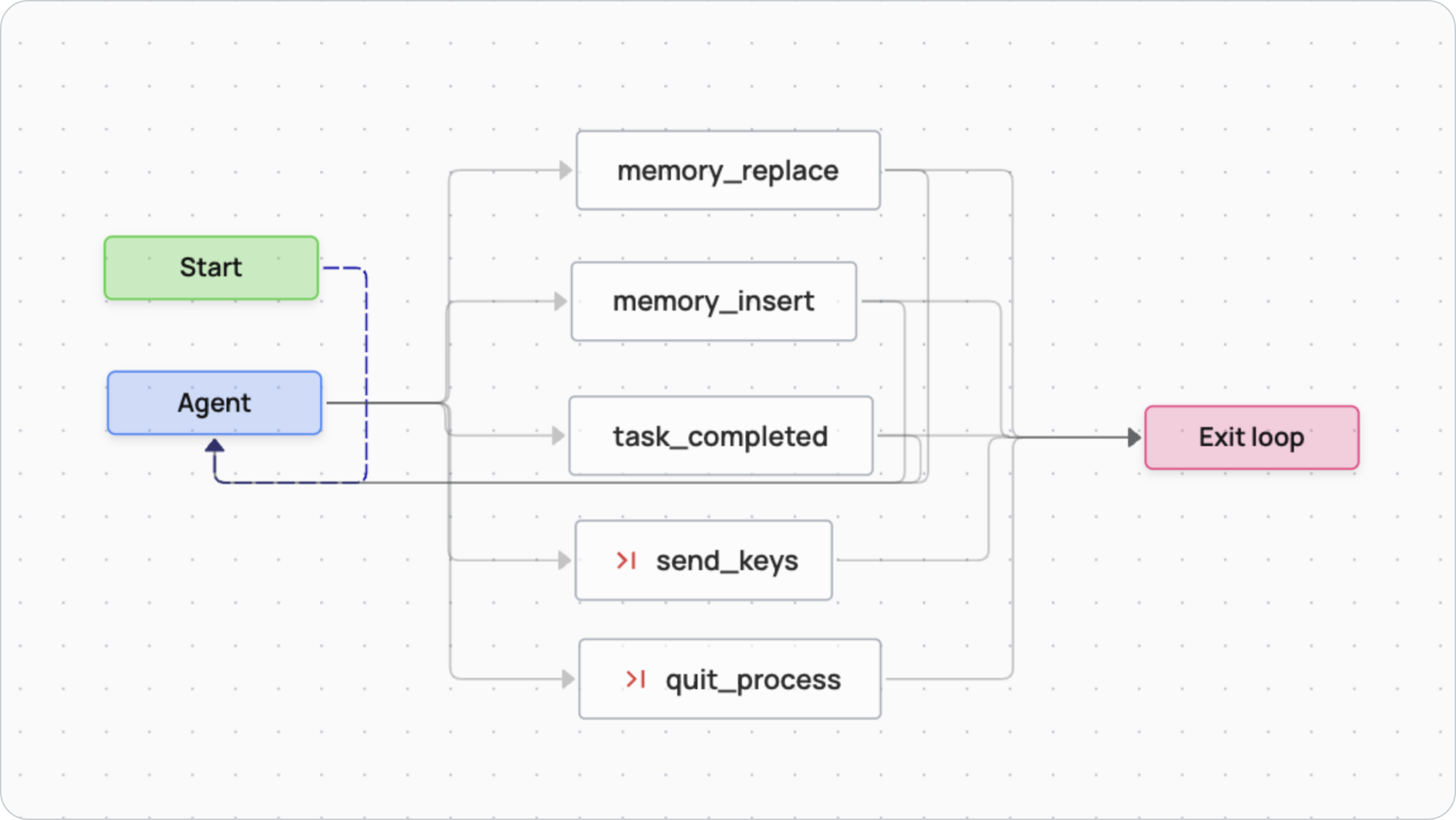

The agent is able to modify the todo list over time using the memory_replace and memory_insert tools provided by Letta.
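To make the two-block layout concrete, here is a minimal sketch in plain Python. It is not Letta's implementation or API: the `MemoryBlock` and `AgentMemory` classes, the block labels, and the signatures given to `memory_replace` and `memory_insert` are all assumptions chosen for illustration. It only shows the idea of a read-only block that rejects edits next to a read-write block the agent can revise.

```python
from dataclasses import dataclass


@dataclass
class MemoryBlock:
    """A labeled segment of the context window (simplified, hypothetical)."""
    label: str
    value: str
    read_only: bool = False


class AgentMemory:
    """Holds memory blocks and exposes the two edit operations."""

    def __init__(self, blocks):
        self.blocks = {block.label: block for block in blocks}

    def _writable(self, label):
        block = self.blocks[label]
        if block.read_only:
            raise PermissionError(f"block '{label}' is read-only")
        return block

    def memory_replace(self, label, old, new):
        """Replace the first exact occurrence of `old` in a block."""
        block = self._writable(label)
        if old not in block.value:
            raise ValueError(f"text not found in block '{label}'")
        block.value = block.value.replace(old, new, 1)

    def memory_insert(self, label, text, line=-1):
        """Insert `text` as a new line at index `line`; -1 appends."""
        block = self._writable(label)
        lines = block.value.splitlines()
        index = len(lines) if line == -1 else line
        lines.insert(index, text)
        block.value = "\n".join(lines)


memory = AgentMemory([
    MemoryBlock("task_description", "Build the project and run its tests.", read_only=True),
    MemoryBlock("todo_list", "[ ] inspect repository\n[ ] run build"),
])

# The agent checks off a finished step and appends a newly discovered one.
memory.memory_replace("todo_list", "[ ] inspect repository", "[x] inspect repository")
memory.memory_insert("todo_list", "[ ] run tests")
```

Keeping the task description read-only means the goal cannot drift as the agent edits its plan, while the todo list stays free to change as work progresses.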

The agent is also given additional tools specifically for Terminal-Bench:

- send_keys to execute terminal commands

- task_completed to signal task completion

- quit_process to interrupt the currently running process
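As a rough illustration of how these three tools could be wired up, the sketch below routes tool calls to an in-memory stand-in for a terminal. Only the tool names come from the description above. `FakeTerminal`, `TerminalTools`, the `dispatch` helper, and the choice to implement quit_process by sending Ctrl-C are assumptions, not the code in the benchmark repository.

```python
class FakeTerminal:
    """In-memory stand-in for a terminal session (hypothetical)."""

    def __init__(self):
        self.keys_sent = []

    def send(self, keys):
        self.keys_sent.append(keys)


class TerminalTools:
    """Maps tool names to actions on a terminal."""

    def __init__(self, terminal):
        self.terminal = terminal
        self.done = False

    def send_keys(self, command):
        # Type the command and press Enter.
        self.terminal.send(command + "\n")
        return f"sent: {command}"

    def quit_process(self):
        # Assumption: interrupting means sending Ctrl-C (ASCII 0x03).
        self.terminal.send("\x03")
        return "interrupt sent"

    def task_completed(self):
        self.done = True
        return "task marked complete"

    def dispatch(self, name, **kwargs):
        """Run a tool by name; unknown names return an error string."""
        tools = {
            "send_keys": self.send_keys,
            "quit_process": self.quit_process,
            "task_completed": self.task_completed,
        }
        if name not in tools:
            return f"error: unknown tool '{name}'"
        return tools[name](**kwargs)


terminal = FakeTerminal()
tools = TerminalTools(terminal)
tools.dispatch("send_keys", command="make -j4")
tools.dispatch("quit_process")
tools.dispatch("task_completed")
```

Returning an error string for an unknown tool, rather than raising, is a design choice in this sketch: it lets the message flow back to the model so it can correct itself on the next step.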

To solve a terminal task, we instantiate a Letta terminal-use agent and grant it access to the terminal environment. The agent observes the current terminal state, updates its internal todo list as necessary, and generates the next command to execute based on its plan. This cycle (observing the environment, updating memory, and executing actions) repeats until the agent calls the task_completed tool. When the context window exceeds a set threshold (40k tokens), Letta recursively summarizes (i.e., compacts) previous messages. The agent’s ability to manage its memory (the message history and memory blocks) allows it to avoid common pitfalls like derailment and distraction while solving long-running, complex tasks.
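The loop and the compaction step can be sketched as follows. This is a simplified model under stated assumptions, not Letta's implementation: the 40k threshold comes from the text above, but the four-characters-per-token estimate, the `KEEP_RECENT` value, the placeholder `summarize` function, and the `observe`/`decide`/`act` callables are all hypothetical. In a real agent the model itself would write the summary and choose the next tool call.

```python
TOKEN_LIMIT = 40_000  # compaction threshold mentioned in the text
KEEP_RECENT = 4       # assumption: messages kept verbatim after compaction


def estimate_tokens(messages):
    """Crude estimate of ~4 characters per token (not a real tokenizer)."""
    return sum(len(m["content"]) for m in messages) // 4


def summarize(messages):
    """Placeholder; a real agent would ask the model for a summary."""
    return f"[summary of {len(messages)} earlier messages]"


def compact(messages, limit=TOKEN_LIMIT, keep_recent=KEEP_RECENT):
    """Fold older messages into one summary once the limit is exceeded.

    A previous summary sits among the older messages, so it is folded
    into the next summary, which is what makes the process recursive.
    """
    if estimate_tokens(messages) <= limit or len(messages) <= keep_recent:
        return messages
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    return [{"role": "system", "content": summarize(older)}] + recent


def run_agent(observe, decide, act, max_steps=50):
    """Observe, decide, act, and compact until task_completed is called."""
    messages = []
    for step in range(max_steps):
        messages.append({"role": "user", "content": observe()})
        tool, args = decide(messages)
        if tool == "task_completed":
            return step + 1, messages
        messages.append({"role": "tool", "content": act(tool, args)})
        messages = compact(messages)
    return max_steps, messages


# Scripted stand-ins for the model and terminal, for demonstration only.
script = iter([("send_keys", {"command": "make"}), ("task_completed", {})])
steps, history = run_agent(
    observe=lambda: "$ ",
    decide=lambda messages: next(script),
    act=lambda tool, args: f"ran {tool}",
)
```

In this sketch the memory-editing tools would be just more tool names returned by `decide`, so plan updates and terminal commands flow through the same loop.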

With Letta, agent developers can rapidly specialize agents for specific tasks by focusing on building the right prompts, tools, and environment. Building the #1 open-source terminal-use agent with Letta shows that Letta's general memory management provides effective building blocks for more performant agents, beyond long-running chatbots.

Learn more

- See our results on Terminal-Bench: https://www.tbench.ai/leaderboard

- Take a look at our benchmark repository: https://github.com/letta-ai/letta-terminalbench

- Learn more about building with Letta: https://docs.letta.com