Rearchitecting Letta’s Agent Loop: Lessons from ReAct, MemGPT, & Claude Code

A common definition for agents is that they're simply a loop that calls an LLM and executes a tool. In other words (as Simon Willison defines an agent):

An LLM agent runs tools in a loop to achieve a goal.

But not all loops are created equal. The underlying design of the agent loop fundamentally shapes how your agent behaves: when it decides to reason, when to terminate, and whether it gets stuck in infinite loops or exits prematurely.

What is agent architecture?

The performance of your agents depends not just on the model and prompts, but the agent architecture: exactly how the LLM is invoked in a loop to reason and take actions. At each iteration, the agent must decide to do one or more of the following:

- Call a tool (e.g., an MCP tool) or send a message

- Reason (spend compute cycles thinking)

- Terminate (exit the loop)

Each of these decisions can be controlled by the LLM itself (e.g. the LLM deciding to reason) or by the agent architecture controlling invocations to the LLM. Historically, LLMs could only specify tool calls or assistant messages explicitly, so additional logic from the agent architecture was necessary to trigger reasoning or make termination decisions. While modern LLMs often have reasoning capabilities built-in, they still lack a built-in way to determine whether to continue executing — this remains the responsibility of the agent architecture.

Early agent architectures: ReAct and MemGPT

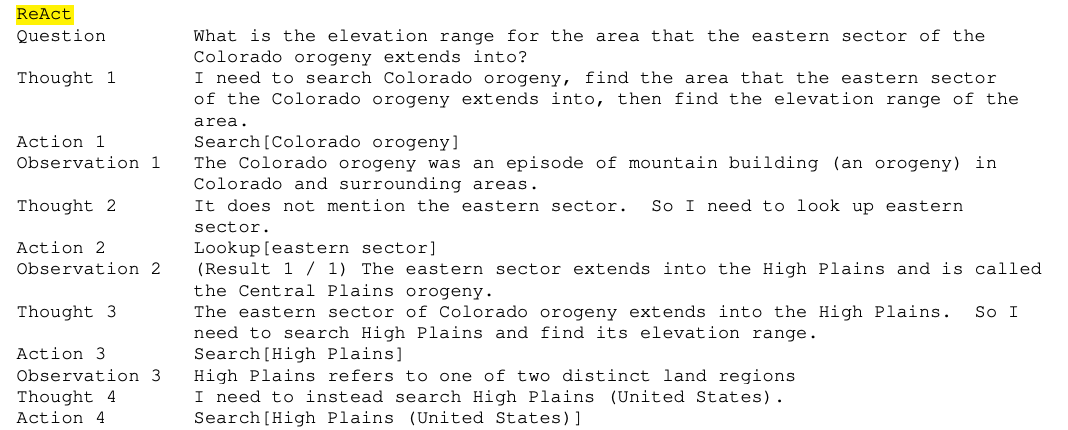

ReAct: Sequential reasoning and actions

ReAct was an early and influential LLM-based agent that combined reasoning and actions in sequence to achieve tasks. In the ReAct architecture, the LLM would be prompted to generate (in each response) a thought, an action, and (optionally) a token indicating the loop should be terminated.

Since the ReAct architecture pre-dated tool calling and native reasoning in LLMs, generation of actions, thoughts, and stop tokens were all done through formatted prompts.

MemGPT: The first stateful agent

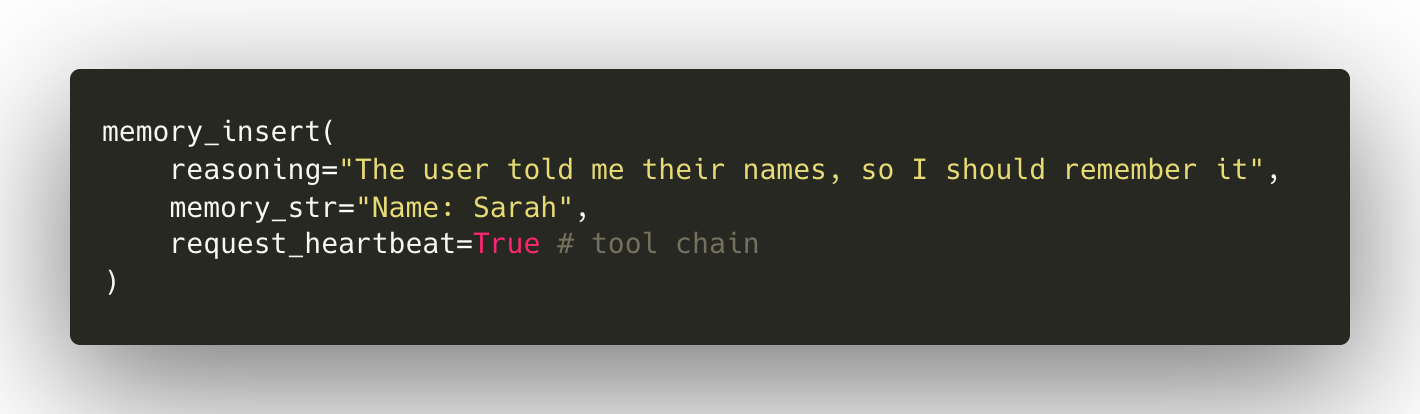

MemGPT was another early agent architecture and notably the first example of a stateful agent with persistent memory. MemGPT's architecture was built on top of early LLM tool calling, making every action a tool call (including assistant message, via a special send_message tool).

This design enabled the tool schemas to manage reasoning and control flow by injecting special keyword arguments into tools:

thinking: The model's reasoning or chain-of-thought (similar to “thoughts” in ReAct)request_heartbeat: Request tool chaining to continue execution

Each time the LLM invoked a tool, it would also be triggered to reason and determine whether the tool chain should continue. This allowed for agentic tool chaining and chain-of-thought reasoning before it was built into base models.

By default, request_heartbeat is set to False in MemGPT, so agents terminate by default rather than continue by default as in ReAct. The tool-based architecture also enabled powerful features to be built on top of the MemGPT agent loop such as tool rules, where tool calling patterns could be forced to follow specific constraints. However, MemGPT’s reliance on tool calling meant that only models that were capable of reliable tool-calling could work with MemGPT.

Although both ReAct and MemGPT are technically just “LLMs in a loop”, the way the underlying LLM is used differs significantly and results in different agent behaviors.

Agent architectures today: Letta, Claude Agents SDK, and OpenAI Codex

Agents are far more pervasive today than in 2023 when MemGPT was first released. Most models now support tool calling and native reasoning (reasoning tokens generated “directly” by the model rather than through prompting). LLM providers themselves now offer pre-built agents like OpenAI Codex and Claude Agents SDK, and have trained their models to be optimized to their own preferred agent architecture design choices.

Today's LLMs are also trained to be adept at agentic patterns such as multi-step tool calling, reasoning interleaved within tool calling, and self-directed termination conditions. As models become more heavily post-trained on new agentic patterns, agent architectures benefit from converging to match these patterns — in other words, staying "in-distribution" relative to the data the LLM was trained on.

Letta's new agent architecture

At Letta, we're transitioning from the previous MemGPT-style architecture to a new Letta V1 architecture (letta_v1_agent) that follows these modern patterns. In this architecture, heartbeats and the send_message tool are deprecated. Only native reasoning and direct assistant message generations from the models are supported.

What this means in practice

We’ve found the letta_v1_agent architecture significantly improves performance for the latest models like GPT-5 and Claude 4.5 Sonnet, with other benefits such as:

- Full support for native reasoning: The new architecture uses Responses API under the hood for OpenAI, and handles encrypted reasoning across providers. This allows models to fully leverage their reasoning capabilities to achieve frontier performance.

- Compatibility with any LLM: Tool calling support is no longer a requirement to connect an LLM to Letta.

- Simpler base system prompt: Understanding of the agentic control loop is baked into the underlying models.

At the same time, there are some downsides compared to the previous MemGPT architecture:

- No direct support for prompted reasoning: Non-reasoning models (e.g., GPT-5 mini, GPT-4o mini) will no longer generate reasoning, and developers cannot mutate the native reasoning state or pass reasoning traces across different models (from closed APIs that encrypt the reasoning).

- No heartbeats: Agents no longer understand the concept of heartbeats (unless implemented manually). For repeatedly triggering agents to run independently (e.g., processing time or sleep-time compute), you'll need custom prompting to explain this environment to the agent

- More limited tool rules: Tool rules cannot be applied to

AssistantMessage, as it is no longer a tool call.

The reasoning token dilemma

Reasoning is now built into models themselves, but the major frontier AI providers (OpenAI, Anthropic, Google Gemini) actively prevent developers from accessing and controlling this reasoning. One advantage of prompted reasoning tokens is ownership: you can see them, modify them, and send them to any model. In contrast, “native reasoning” tokens are often not transparent (the developer can only see a summary), and immutable (the developer is not allowed to modify them without voiding the API request). The performance difference between native reasoning and prompted reasoning remains unclear and likely varies by use case.

We'll continue supporting the original MemGPT agent architecture, but recommend using the new Letta V1 architecture for the latest reasoning models like GPT-5 and Claude 4.5 Sonnet.

Conclusion

Fully leveraging the underlying model capabilities to achieve frontier performance requires careful architecting of the underlying agentic loop and agent architecture, and adapting them alongside evolutions in model development. To see what agents are capable of today, try the latest agent architecture on the Letta Platform.

.png)