Introducing Recovery-Bench: Evaluating LLMs' Ability to Recover from Mistakes

"The only real mistake is the one from which we learn nothing." - Henry Ford

Long-lived agents will eventually make mistakes and need to recover. For example, a coding agent might implement the wrong solution, or a personal assistant may misunderstand instructions and execute an incorrect sequence of actions. In these cases, it's crucial that agents can recover (or ideally learn) from their mistakes, rather than suffer permanent performance degradation due to context pollution.

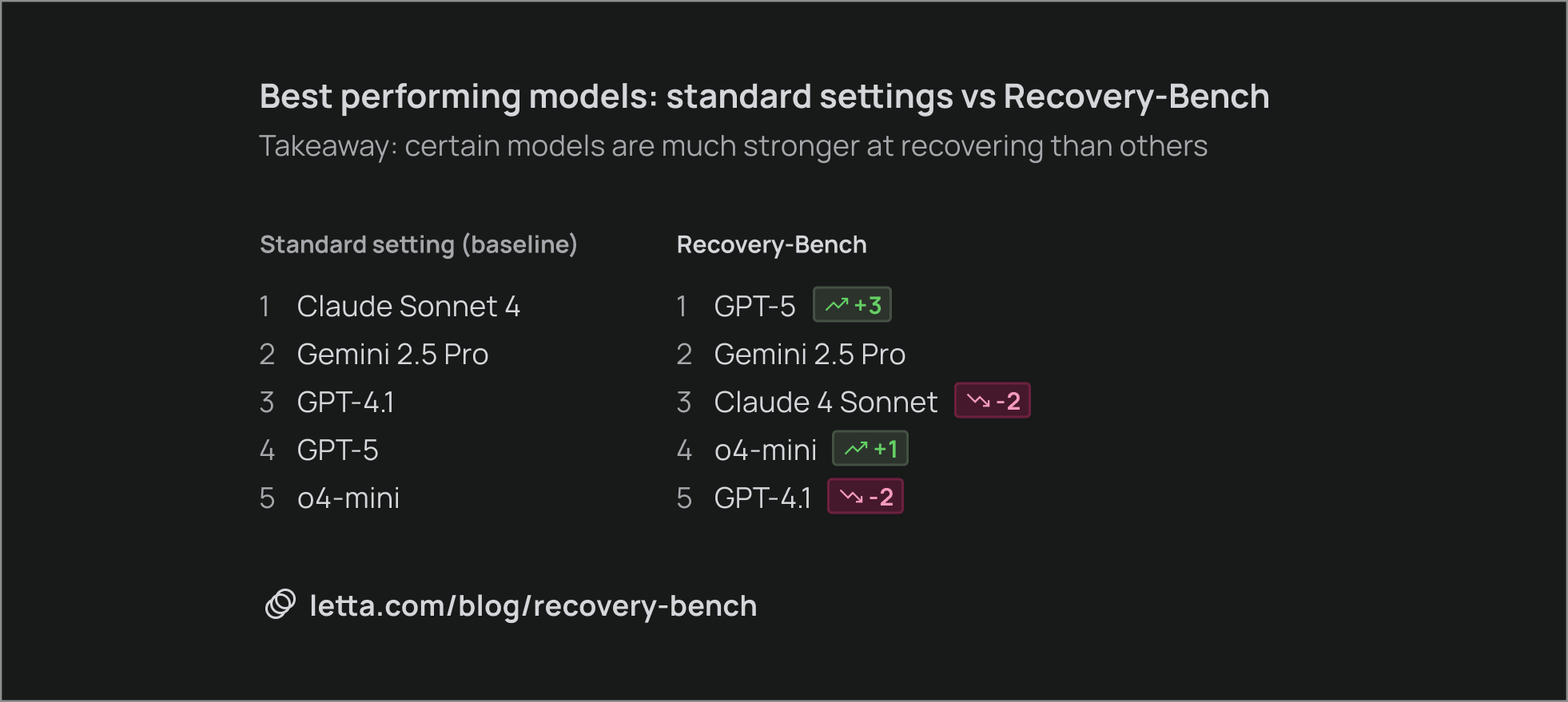

Today we're excited to announce Recovery-Bench, a benchmark and evaluation method for measuring how well agents can recover from errors and corrupted states. We find that the models that recover best are not the same as the top performers in fresh states, with GPT-5 showing an especially significant improvement in ranking.

Background

Current agents excel at short-horizon tasks like small bug fixes, but their ability to independently execute complex long-horizon tasks remains limited. With long-horizon tasks, even the most capable frontier models will inevitably make errors at some point in their trajectory. Yet existing agent benchmarks evaluate agents starting from fresh environments. For example, coding agents are usually evaluated starting from a clean repository and GitHub issue. But in reality, coding agents work in messy environments containing files and edits from previous attempts to solve the task, erroneous reasoning traces from debugging sessions, and other artifacts.

Recovery-Bench is a new benchmark that bridges this gap by evaluating agents in more realistic environments that include existing trajectories from previous attempts. Failed trajectories represent opportunities for agents to learn from their mistakes, but our research reveals a counterintuitive finding: the best AI models today are often distracted or misled by failed trajectories in their context, suffering from context pollution.

Context pollution refers to the leftover artifacts of an agent’s failure. It can include erroneous actions, misleading reasoning traces, or corrupted environment states that persist into an agent’s working context. For instance, a coding agent might inherit a broken function or misapplied patch from an earlier attempt, leading it to compound errors rather than correct them.

Our results highlight that recovery represents a distinct capability from performance in fresh states. Claude 4 Sonnet, which scores highest in the standard Terminal-Bench setting, drops in rank under recovery conditions, while GPT-5 moves ahead. This suggests that resilience to context pollution does not simply follow from raw problem-solving strength.

Building Recovery-Bench

Recovery-Bench provides a general and simple methodology for measuring agent recovery: how well an agent can successfully complete a task by recovering from previous failures.

We select a long-horizon task and ask a weaker agent to solve it in the standard setting with a clean environment. We then filter the resulting trajectories (the full sequences of actions an agent takes and the environment states those actions produce), keeping only those where the agent failed to complete the task. Finally, we evaluate how well other agents can recover by initializing them with the actions and environment left behind by these failed trajectories.
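The three steps above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the Recovery-Bench implementation: `Trajectory`, `RecoveryState`, `build_recovery_states`, and `recovery_rate` are hypothetical names, the environment snapshot is modeled as a plain dictionary, and the toy data is made up.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Trajectory:
    """One attempt by the initial (weaker) agent. Hypothetical structure."""
    task_id: str
    actions: List[str]          # commands the agent issued, in order
    final_env: Dict[str, str]   # assumed snapshot: file path -> contents
    success: bool               # did the task's checks pass?


@dataclass
class RecoveryState:
    """What a recovery agent is initialized with."""
    task_id: str
    env: Dict[str, str]
    action_history: List[str]


def build_recovery_states(trajectories: List[Trajectory]) -> List[RecoveryState]:
    """Keep only failed trajectories and turn them into starting states."""
    return [
        RecoveryState(t.task_id, dict(t.final_env), list(t.actions))
        for t in trajectories
        if not t.success
    ]


def recovery_rate(states: List[RecoveryState],
                  recover: Callable[[RecoveryState], bool]) -> float:
    """Fraction of failed states from which the recovery agent completes the task."""
    if not states:
        return 0.0
    return sum(1 for s in states if recover(s)) / len(states)


# Toy data, made up purely for illustration.
trajectories = [
    Trajectory("task-a", ["ls", "make"], {"Makefile": "all:"}, success=True),
    Trajectory("task-b", ["rm config.yml"], {}, success=False),
    Trajectory("task-c", ["echo x > out.txt"], {"out.txt": "x\n"}, success=False),
]
states = build_recovery_states(trajectories)

# Stand-in for a real recovery agent: it "succeeds" only if a file survived.
rate = recovery_rate(states, lambda s: len(s.env) > 0)
```

In a real run, `recover` would launch the evaluated model inside the restored environment and report whether the task's checks pass. The lambda here only exists to make the sketch executable.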

Recovery-Bench-v0 uses tasks from Terminal-Bench, a challenging benchmark for measuring agents' capabilities at using the terminal. We first collect trajectories from gpt-4o-mini, then evaluate a variety of models (GPT-5, Gemini 2.5 Pro, Claude 4 Sonnet, o4-mini, GPT-4.1) on agent recovery tasks.

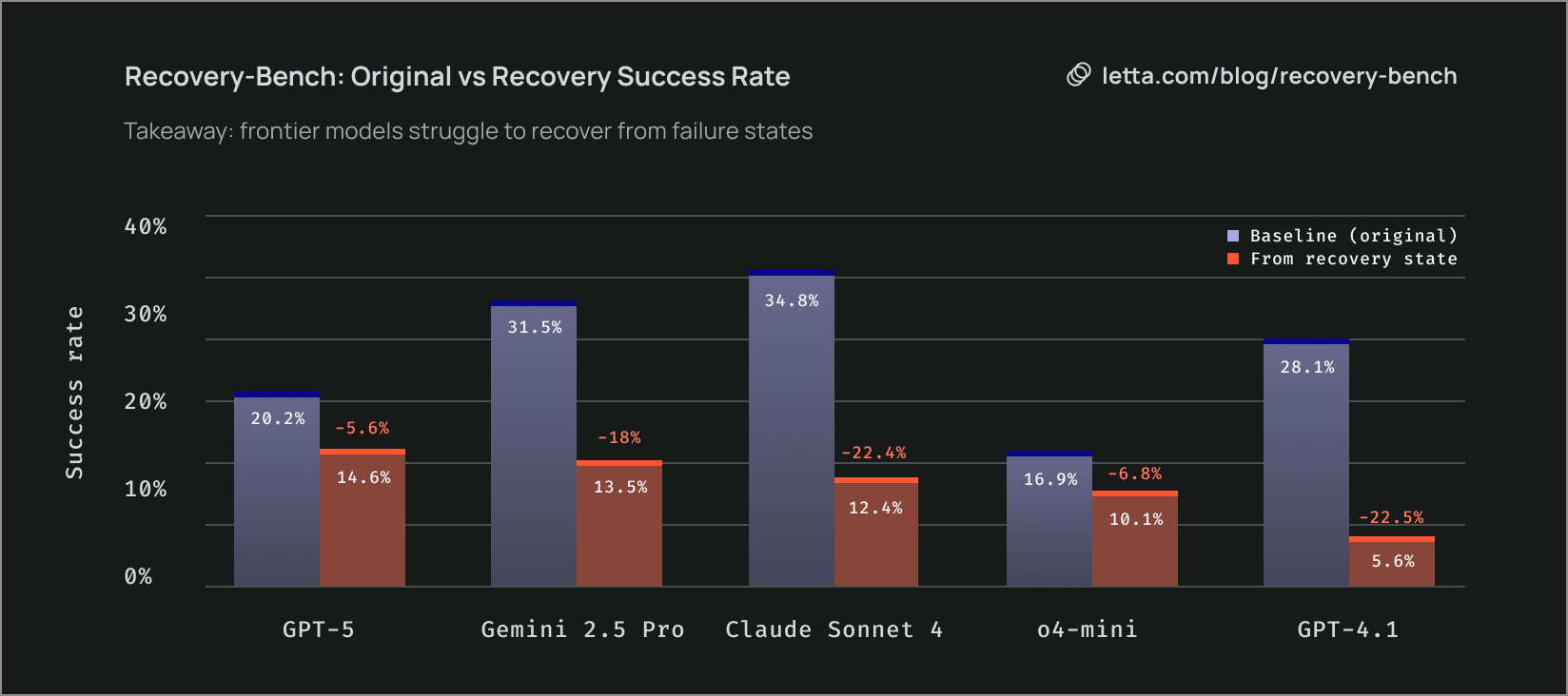

How Challenging Is Recovery?

Agent recovery tasks are challenging even for the strongest models. In the original Terminal-Bench setting, models scored an average of 26.3%, with the best model, Claude 4 Sonnet, reaching 34.8%. On Recovery-Bench, models score an average of only 11.2%. Compared to their performance on the original Terminal-Bench, they show a 57% relative decrease in accuracy.
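The 57% figure follows directly from the two averages reported above. A short check, where `relative_decrease` is just a helper name chosen for this example:

```python
def relative_decrease(baseline: float, new: float) -> float:
    """Drop from baseline to new, expressed as a fraction of the baseline."""
    return (baseline - new) / baseline


# Average accuracy in the original Terminal-Bench setting vs. on Recovery-Bench.
drop = relative_decrease(26.3, 11.2)
print(f"{drop:.1%}")  # prints 57.4%
```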

Is Agent Recovery a Standard Capability?

The rankings on Recovery-Bench differ from those in the standard Terminal-Bench setting, indicating that recovery is a distinct capability rather than a by-product of general task performance. For example, Claude 4 Sonnet achieves the highest Terminal-Bench score at 34.8% but ranks third on Recovery-Bench, while GPT-5 achieves only 20.2% on the original Terminal-Bench but ranks first on Recovery-Bench. Interestingly, o4-mini has the lowest Terminal-Bench score but performs significantly better than GPT-4.1 on agent recovery.

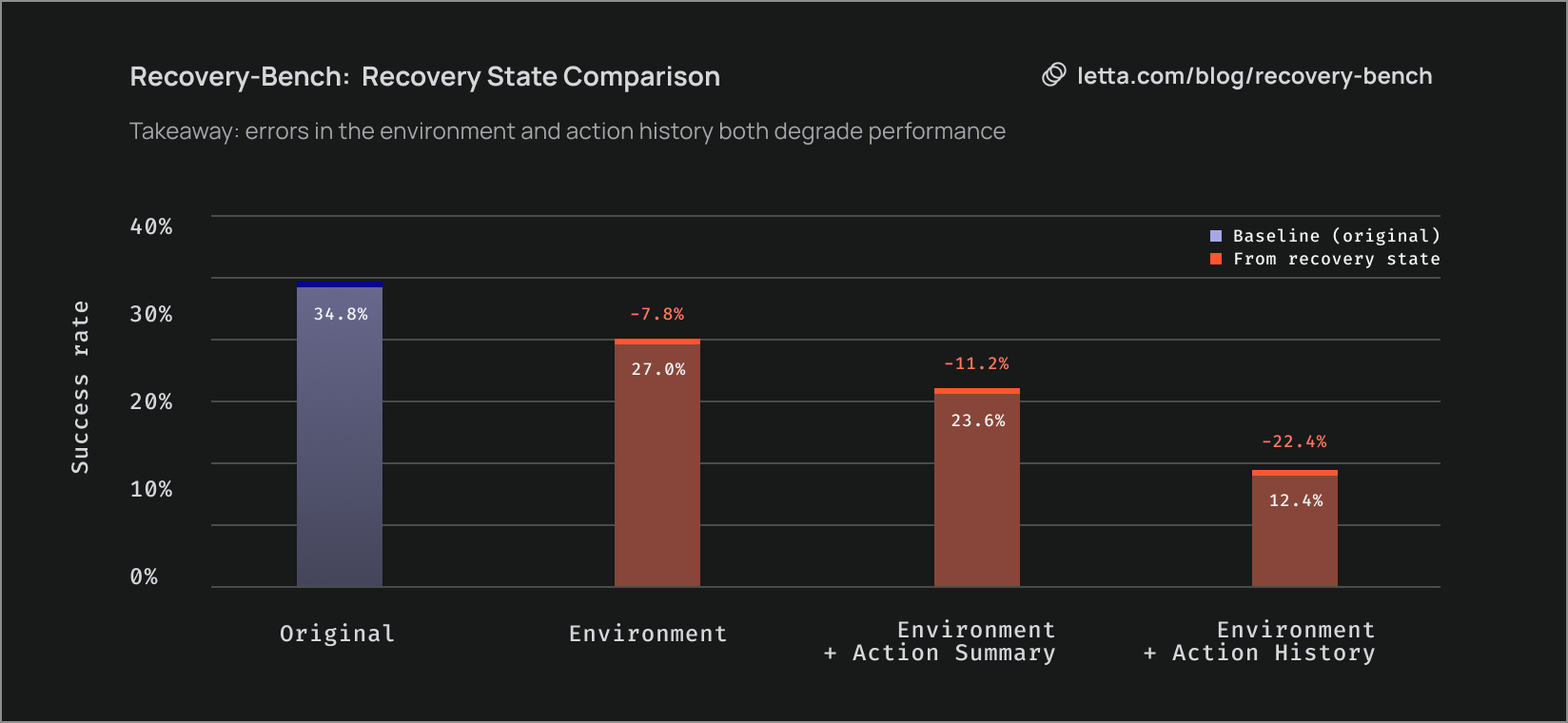

What's in a Recovery State?

Recovery states contain two distinct components: environments and action histories. In Terminal-Bench, actions correspond to commands sent to the terminal (e.g., ls) and the resulting terminal states. In realistic settings, agents need to recover from both the modified environment and erroneous actions present in their context.

To better understand what makes Recovery-Bench challenging, we study three different settings that initialize the recovery agent with different components:

- Environment: The environment is set to the state after a failed agent trajectory, but the new agent receives no additional context about the initial trajectory

- Environment + Action Summary: In addition to the environment, the agent receives a summary of the previous actions

- Environment + Action History: In addition to the environment, the agent receives the full actions from the initial failed trajectory
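The three settings share the same post-failure environment and differ only in what is added to the agent's context. A rough sketch of that difference is below. It assumes a hypothetical `build_initial_context` helper, a placeholder `naive_summary` function, and prompt wording invented for this example. It also simplifies the action history to the commands alone, leaving out the resulting terminal states.

```python
from enum import Enum
from typing import Callable, List


class RecoverySetting(Enum):
    ENVIRONMENT = "environment"
    ENVIRONMENT_SUMMARY = "environment+summary"
    ENVIRONMENT_HISTORY = "environment+history"


def naive_summary(actions: List[str]) -> str:
    """Placeholder summarizer; a real setup would likely use a model here."""
    return (f"A previous attempt ran {len(actions)} commands "
            "and did not complete the task.")


def build_initial_context(task_prompt: str,
                          setting: RecoverySetting,
                          action_history: List[str],
                          summarize: Callable[[List[str]], str] = naive_summary) -> str:
    """Assemble the text the recovery agent sees before its first action.

    The environment itself is restored separately; this function only
    decides how much of the failed trajectory is exposed as context.
    """
    parts = [task_prompt]
    if setting is RecoverySetting.ENVIRONMENT_SUMMARY:
        parts.append("Summary of a previous failed attempt:\n"
                     + summarize(action_history))
    elif setting is RecoverySetting.ENVIRONMENT_HISTORY:
        parts.append("Commands from a previous failed attempt:\n"
                     + "\n".join(action_history))
    # ENVIRONMENT: no extra context beyond the task prompt.
    return "\n\n".join(parts)


history = ["cd /app", "pip install requests", "python main.py"]
env_only = build_initial_context("Fix the failing build.",
                                 RecoverySetting.ENVIRONMENT, history)
with_summary = build_initial_context("Fix the failing build.",
                                     RecoverySetting.ENVIRONMENT_SUMMARY, history)
with_history = build_initial_context("Fix the failing build.",
                                     RecoverySetting.ENVIRONMENT_HISTORY, history)
```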

In our benchmark results, we observe that providing just the environment already significantly degrades agent performance compared to the original setting. Giving the agent both the environment and action summary further degrades performance. Interestingly, providing the full action history yields the worst performance, showing that displaying the actions directly doesn't immediately help resolve errors in the environment. Taken together, our results show that Recovery-Bench's challenge stems from both recovering from erroneous actions affecting the environment and managing context pollution from errors in the action history.

Future Work

Our initial results make it clear that today’s frontier models lack the ability to naturally recover from failed states, a key ingredient for continual learning. Our research also suggests that agents may benefit from using different models at different points within a single trajectory, as well as robust context management systems (like those in Letta) that enable the agent to learn from failed trajectories rather than be distracted by them.

We also plan to expand the current methodology for building Recovery-Bench to include tasks beyond Terminal-Bench, including SWE-Bench and other agent benchmarks, to more broadly understand how agents recover. We expect to observe different phenomena in domains beyond terminal use.

At Letta, we are building self-improving, perpetual agents that can learn from experience and adapt over time. If you’re excited by our mission and want to contribute towards work like Recovery-Bench, apply to join our team.

Learn More

- GitHub repository: https://github.com/letta-ai/recovery-bench

- Building stateful agents with Letta: https://docs.letta.com

- Letta Platform: https://app.letta.com
